High-quality datasets are essential for training and validating the algorithms that enable self-driving cars to perceive and navigate their environments.

These datasets supply the large volumes of data needed to teach machines to recognize objects, predict movements, and make driving decisions.

This article explores the best datasets for autonomous vehicle research, their features, applications, and importance.

Importance of Datasets in Autonomous Vehicle Research

Datasets are crucial for several reasons:

- Training Algorithms: Large and diverse datasets are necessary to train machine learning models to recognize objects and scenarios.

- Validation and Testing: Datasets are used to validate and test the performance of autonomous driving systems in different environments and conditions.

- Benchmarking: They provide a standard for comparing different algorithms and systems.

- Continuous Improvement: Regularly updated datasets help improve the robustness and accuracy of autonomous driving systems.

Key Features of Good Autonomous Vehicle Datasets

A good dataset for autonomous vehicle research should have the following features:

- Diversity: The dataset should include various driving scenarios, weather conditions, and geographic locations.

- High-Quality Annotations: Accurate and detailed annotations are essential for training effective models.

- Multimodal Data: Combining data from sensors such as cameras, lidar, and radar enhances the understanding of the environment.

- Scalability: The dataset should be large enough to train complex models yet still manageable for practical use.

Top Datasets for Autonomous Vehicle Research

Here are some of the best datasets available for autonomous vehicle research, each offering unique features and benefits.

1. KITTI Vision Benchmark Suite

The KITTI Vision Benchmark Suite is one of the most widely used datasets in autonomous driving research. It provides a comprehensive collection of data captured from vehicles driving in and around Karlsruhe, Germany.

Features:

- Sensor Data: Includes stereo camera images, lidar point clouds, and GPS/IMU data.

- Annotations: Provides 3D bounding boxes, tracking annotations, and semantic segmentation.

- Diverse Scenarios: Covers urban, rural, and highway environments.
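
To give a feel for working with this kind of sensor data, here is a minimal sketch that decodes a lidar scan. It assumes the commonly documented KITTI Velodyne layout, where each point is stored as four consecutive 32-bit floats (x, y, z, reflectance). The function name `parse_velodyne_scan` and the synthetic sample buffer are hypothetical and are not part of any official KITTI tooling.

```python
import struct

# Assumed record layout: x, y, z, reflectance as little-endian float32 values.
POINT_FORMAT = "<4f"
POINT_SIZE = struct.calcsize(POINT_FORMAT)  # 16 bytes per point


def parse_velodyne_scan(raw: bytes) -> list[tuple[float, float, float, float]]:
    """Decode a raw lidar buffer into (x, y, z, reflectance) tuples."""
    if len(raw) % POINT_SIZE != 0:
        raise ValueError("buffer length is not a multiple of one point record")
    return list(struct.iter_unpack(POINT_FORMAT, raw))


# Synthetic two-point scan standing in for the bytes read from a scan file.
sample = struct.pack("<8f", 1.0, 2.0, 0.5, 0.25, -3.0, 4.0, 0.0, 0.75)
points = parse_velodyne_scan(sample)
```

In practice the bytes would come from reading a scan file in binary mode, and a numerical library would usually be a faster choice for full-size point clouds.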

2. Waymo Open Dataset

Waymo, a leader in autonomous driving technology, releases the Waymo Open Dataset. This extensive dataset includes data from multiple cities in the United States.

Features:

- Sensor Data: Contains high-resolution images, lidar point clouds, and contextual information.

- Annotations: Detailed annotations for object detection, tracking, and segmentation.

- Geographic Diversity: Captured from a variety of urban and suburban locations.

The Waymo Open Dataset is available for download from Waymo's official website.

3. nuScenes Dataset

The nuScenes Dataset by Aptiv is a large-scale dataset that covers a wide range of driving scenarios. It is mainly known for its detailed annotations and sensor fusion capabilities.

Features:

- Sensor Data: Includes images from six cameras, lidar, radar, and GPS/IMU data.

- Annotations: Rich annotations for object detection, tracking, and map information.

- Complex Scenarios: Captures diverse and complex urban driving scenarios.

4. ApolloScape Dataset

Baidu’s Apollo project provides the ApolloScape Dataset. It supports various tasks such as lane detection, 3D car instance understanding, and semantic segmentation.

Features:

- Sensor Data: Offers data from multiple cameras, lidar, and GPS.

- Annotations: Includes annotations for 3D car instances, lanes, and road scenes.

- Wide Coverage: Collected from different cities and under various weather conditions.

5. Argoverse Dataset

The Argoverse Dataset, released by Argo AI, includes detailed data for autonomous driving research, focusing mainly on motion forecasting and 3D tracking.

Features:

- Sensor Data: Contains data from cameras, lidar, and high-definition maps.

- Annotations: Rich annotations for object tracking, lane centerlines, and driveable areas.

- Motion Forecasting: Includes annotations for vehicle motion forecasting.

6. BDD100K Dataset

The BDD100K Dataset by UC Berkeley is one of the largest and most diverse autonomous driving datasets, featuring a wide range of driving scenarios and conditions.

Features:

- Sensor Data: Includes images from front-facing cameras.

- Annotations: Provides annotations for object detection, lane markings, and drivable areas.

- Diverse Conditions: Captures data from different times of day, weather conditions, and geographic locations.

7. Cityscapes Dataset

The Cityscapes Dataset focuses on semantic understanding of urban street scenes. It is well suited to tasks like semantic segmentation and object detection.

Features:

- Sensor Data: High-resolution images from forward-facing cameras.

- Annotations: Detailed annotations for semantic segmentation, instance segmentation, and object detection.

- Urban Focus: Primarily captures urban street scenes from multiple European cities.

Table 1: Comparison of Top Autonomous Vehicle Datasets

| Dataset | Sensor Data | Annotations | Key Features |
|---|---|---|---|
| KITTI | Stereo cameras, lidar, GPS/IMU | 3D bounding boxes, tracking, segmentation | Diverse scenarios, comprehensive annotations |
| Waymo Open Dataset | High-resolution images, lidar, context | Object detection, tracking, segmentation | Geographic diversity, extensive data |
| nuScenes | Cameras, lidar, radar, GPS/IMU | Object detection, tracking, map info | Complex urban scenarios, sensor fusion |
| ApolloScape | Cameras, lidar, GPS | 3D car instances, lanes, road scenes | Wide coverage, various weather conditions |
| Argoverse | Cameras, lidar, HD maps | Object tracking, lane centerlines, motion | Motion forecasting, detailed tracking |
| BDD100K | Front-facing cameras | Object detection, lane markings, drivable areas | Large and diverse, various conditions |
| Cityscapes | High-resolution forward-facing cameras | Semantic segmentation, instance segmentation | Urban focus, high-quality annotations |

Applications of Autonomous Vehicle Datasets

Autonomous vehicle datasets are used in various applications, each critical for developing and refining self-driving technology.

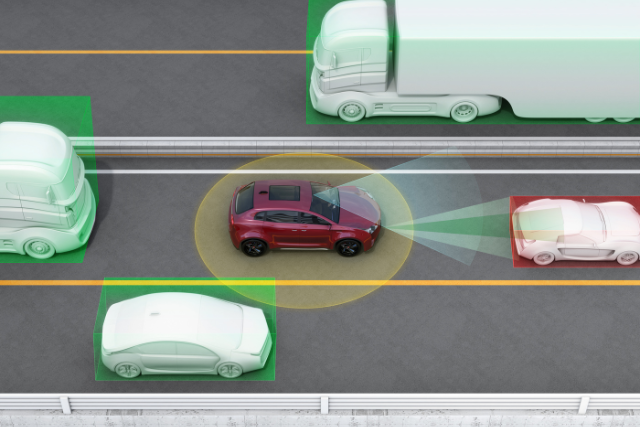

Object Detection and Classification

Datasets with detailed annotations for objects (e.g., vehicles, pedestrians, cyclists) are essential for training models to recognize and classify different environmental entities. This is a fundamental capability for autonomous cars to navigate safely.
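
Detection models trained on these annotations are commonly scored by how well their predicted boxes overlap the annotated ones. The sketch below computes intersection over union (IoU) for two axis-aligned 2D boxes. The `(x_min, y_min, x_max, y_max)` box convention and the function name `iou` are assumptions made for illustration; individual datasets define their own box formats and matching thresholds.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if the boxes overlap at all.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp to zero so disjoint boxes contribute no intersection area.
    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


annotated = (0.0, 0.0, 2.0, 2.0)
predicted = (1.0, 1.0, 3.0, 3.0)
overlap = iou(annotated, predicted)  # intersection 1, union 7
```

A prediction is then typically counted as correct when its IoU with an annotated box exceeds a chosen threshold.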

Lane Detection and Road Segmentation

Datasets that provide lane markings and road segmentation annotations help develop lane-keeping, lane-changing, and path-planning algorithms. Accurate lane detection is crucial for maintaining proper vehicle positioning on the road.
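
One simple quantity that lane annotations make measurable is how far the vehicle sits from the lane centerline. The following sketch computes the shortest distance from a point to a centerline given as a list of 2D vertices. The polyline representation and the name `distance_to_centerline` are assumptions for illustration and do not mirror any particular dataset's API.

```python
import math


def distance_to_centerline(point, centerline):
    """Shortest distance from an (x, y) point to a polyline of (x, y) vertices.

    Returns math.inf if the centerline has fewer than two vertices.
    """
    px, py = point
    best = math.inf
    for (x1, y1), (x2, y2) in zip(centerline, centerline[1:]):
        dx, dy = x2 - x1, y2 - y1
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0:
            t = 0.0  # degenerate segment: both vertices coincide
        else:
            # Project the point onto the segment and clamp to its endpoints.
            t = ((px - x1) * dx + (py - y1) * dy) / seg_len_sq
            t = max(0.0, min(1.0, t))
        closest_x, closest_y = x1 + t * dx, y1 + t * dy
        best = min(best, math.hypot(px - closest_x, py - closest_y))
    return best


# Hypothetical centerline with a right-angle turn, coordinates in meters.
lane = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
offset = distance_to_centerline((5.0, 1.5), lane)
```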

Motion Forecasting and Path Prediction

Datasets that include motion forecasting annotations enable the development of models that predict the future trajectories of surrounding objects. This is important for collision avoidance and planning safe manoeuvres.
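
To make this concrete, the sketch below implements a constant-velocity forecast, a simple baseline that learned models are often compared against, and scores it with the average displacement error (the mean distance between predicted and actual positions). The function names and the toy trajectory are hypothetical, and the time step is assumed to be uniform.

```python
import math


def constant_velocity_forecast(history, steps):
    """Extrapolate future (x, y) positions from the last two observed positions."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per time step
    return [(x1 + vx * k, y1 + vy * k) for k in range(1, steps + 1)]


def average_displacement_error(predicted, actual):
    """Mean Euclidean distance between matched predicted and actual positions."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, a) for p, a in zip(predicted, actual))
    return total / len(predicted)


# Toy example: the object drives straight, then begins to drift sideways.
history = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
ground_truth = [(3.0, 0.0), (4.0, 1.0), (5.0, 2.0)]

forecast = constant_velocity_forecast(history, steps=3)
ade = average_displacement_error(forecast, ground_truth)
```

The growing gap between the straight-line forecast and the drifting ground truth is exactly the kind of error that trajectory annotations let researchers measure and reduce.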

Sensor Fusion

Datasets with multimodal data (cameras, lidar, radar) allow researchers to develop and refine sensor fusion techniques. Combining data from multiple sensors provides a more comprehensive understanding of the vehicle’s surroundings.
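
As a small illustration of the idea, the sketch below fuses two independent estimates of the same quantity, such as the range to an object measured by lidar and by radar, using inverse-variance weighting. This is only one elementary fusion technique, and the function name and the noise figures are assumptions chosen for the example, not values taken from any dataset.

```python
def fuse_estimates(value_a, var_a, value_b, var_b):
    """Fuse two independent estimates by inverse-variance weighting.

    Returns the fused value and its variance.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_var = 1.0 / (weight_a + weight_b)
    return fused_value, fused_var


# Hypothetical readings: a precise lidar range and a noisier radar range (meters).
fused_range, fused_var = fuse_estimates(20.0, 0.01, 20.6, 0.09)
```

The fused estimate leans toward the more precise sensor, and its variance is lower than that of either input, which is the basic payoff of combining sensors.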

Traffic Sign and Signal Recognition

Datasets that include traffic signs and signal annotations are used to train models for recognizing and interpreting traffic control devices. This capability is essential for obeying traffic laws and ensuring safe driving behaviour.

Mapping and Localization

High-definition map data included in some datasets is used for developing algorithms that localize the vehicle within a map. Accurate localization is vital for navigation and executing planned routes.

Challenges in Using Autonomous Vehicle Datasets

While autonomous vehicle datasets provide invaluable resources for research, they also come with several challenges.

Data Volume and Management

The sheer volume of data in these datasets can be overwhelming. Efficient data management and processing pipelines are necessary to handle large datasets effectively.
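
One common way to keep memory use under control is to process records lazily in fixed-size batches rather than loading everything at once. Here is a minimal sketch of that pattern using only the standard library. The helper name `iter_batches` and the frame identifiers are hypothetical.

```python
from itertools import islice


def iter_batches(records, batch_size):
    """Yield lists of at most batch_size records without loading them all at once."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical frame identifiers produced lazily by a generator expression.
frame_ids = (f"frame_{i:06d}" for i in range(10))
batches = list(iter_batches(frame_ids, batch_size=4))
```

Because the input is consumed through an iterator, the same function works whether the records come from a list, a file listing, or a database cursor.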

Annotation Quality and Consistency

The accuracy and consistency of annotations are crucial for training reliable models. Inconsistent or erroneous annotations can lead to poor model performance.
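
Simple automated checks can catch many annotation errors before training begins. The sketch below flags 2D box annotations with an unknown label, zero or negative area, or coordinates outside the image. The annotation dictionary layout, the label set, and the function name are all assumptions made for illustration.

```python
# Hypothetical label set; real datasets define their own class lists.
KNOWN_LABELS = {"car", "pedestrian", "cyclist"}


def find_annotation_problems(annotation, image_width, image_height):
    """Return a list of problems found in one 2D box annotation.

    The annotation is assumed to be a dict with a "label" string and a
    "box" given as (x_min, y_min, x_max, y_max) in pixels.
    """
    problems = []
    x_min, y_min, x_max, y_max = annotation["box"]
    if annotation["label"] not in KNOWN_LABELS:
        problems.append("unknown label")
    if x_max <= x_min or y_max <= y_min:
        problems.append("box has no area")
    if x_min < 0 or y_min < 0 or x_max > image_width or y_max > image_height:
        problems.append("box outside image")
    return problems


good = {"label": "car", "box": (10, 20, 110, 80)}
bad = {"label": "truck", "box": (50, 40, 30, 60)}

good_report = find_annotation_problems(good, image_width=1280, image_height=720)
bad_report = find_annotation_problems(bad, image_width=1280, image_height=720)
```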

Sensor Calibration and Synchronization

Ensuring that sensor data is calibrated and synchronized is essential for effective sensor fusion and accurate environment perception.
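
A typical synchronization step is pairing each camera frame with the lidar sweep captured closest in time and discarding pairs that are too far apart. The sketch below shows one way to do this with a binary search. The function name, the integer millisecond timestamps, and the tolerance value are assumptions for the example.

```python
from bisect import bisect_left


def match_nearest(camera_times, lidar_times, tolerance):
    """Pair each camera timestamp with the closest lidar timestamp.

    lidar_times must be sorted in ascending order. Camera frames with no
    lidar sweep within the tolerance are dropped. Returns a list of
    (camera_time, lidar_time) pairs.
    """
    pairs = []
    for cam_t in camera_times:
        idx = bisect_left(lidar_times, cam_t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = lidar_times[max(0, idx - 1): idx + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda t: abs(t - cam_t))
        if abs(nearest - cam_t) <= tolerance:
            pairs.append((cam_t, nearest))
    return pairs


# Hypothetical timestamps in milliseconds.
camera_ms = [0, 100, 200, 500]
lidar_ms = [5, 98, 260]
matched = match_nearest(camera_ms, lidar_ms, tolerance=20)
```

Here the frames at 200 ms and 500 ms are dropped because no lidar sweep falls within 20 ms of them.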

Diverse Driving Conditions

Datasets must cover various driving conditions (e.g., different weather, lighting, and traffic scenarios) to ensure robust and generalizable models.
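
A quick way to audit coverage is to count how many frames fall under each condition and flag the ones that are rare. The sketch below does this for a single metadata field. The per-frame dictionary layout, the `weather` field name, and the 15% threshold are hypothetical choices for illustration.

```python
from collections import Counter


def underrepresented_conditions(frames, key, min_share):
    """Return condition values whose share of frames is below min_share.

    Only conditions that appear at least once can be reported; conditions
    that are entirely absent need a separate check against an expected list.
    """
    counts = Counter(frame[key] for frame in frames)
    total = sum(counts.values())
    return sorted(value for value, n in counts.items() if n / total < min_share)


# Hypothetical per-frame metadata: mostly clear weather, little rain or snow.
frames = (
    [{"weather": "clear"}] * 8
    + [{"weather": "rain"}]
    + [{"weather": "snow"}]
)
rare = underrepresented_conditions(frames, key="weather", min_share=0.15)
```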

Legal and Ethical Considerations

Using and sharing autonomous vehicle data involves navigating legal and ethical issues, including privacy concerns and regulatory compliance.

Future Trends in Autonomous Vehicle Datasets

The field of autonomous vehicle research is continually evolving, and future trends in datasets reflect this dynamic environment.

Increased Dataset Diversity

Future datasets will likely include more diverse driving conditions, geographic locations, and scenarios to improve model robustness and generalization.

Real-Time Data Streaming

The development of real-time data streaming capabilities will enable researchers to access and utilize live data for training and testing, enhancing the relevance and applicability of their models.

Enhanced Sensor Technology

As sensor technology advances, future datasets will incorporate data from more sophisticated sensors, providing higher resolution and accuracy.

Synthetic Data Generation

Synthetic data generated through simulation can supplement real-world datasets, providing additional scenarios and conditions that are difficult to capture in the real world.

Collaborative Data Sharing

Increased collaboration between research institutions, automotive companies, and regulatory bodies will promote data sharing and standardization, accelerating the development of autonomous driving technology.

FAQs

1. What are autonomous vehicle datasets used for?

Autonomous vehicle datasets are used to train, validate, and test the algorithms and machine learning models that enable autonomous driving. These datasets provide the data needed for object detection, lane detection, motion forecasting, sensor fusion, traffic sign recognition, and mapping.

2. Why is diversity important in autonomous vehicle datasets?

Diversity in autonomous vehicle datasets is crucial because it ensures that the models trained on them can handle different driving scenarios, weather conditions, and geographic locations. This helps develop robust and generalizable models that perform well across a wide range of real-world situations.

3. What is sensor fusion in the context of autonomous vehicles?

Sensor fusion in autonomous vehicles refers to combining data from multiple sensors (such as cameras, lidar, and radar) to create a comprehensive understanding of the vehicle’s environment. This approach improves the accuracy and reliability of perception systems by drawing on the complementary strengths of each sensor.

4. How do autonomous vehicle datasets support motion forecasting?

Autonomous vehicle datasets that include annotations for motion forecasting provide data on the trajectories of surrounding objects. This information is used to train models that predict the future movements of vehicles, pedestrians, and other entities, enabling the autonomous system to plan safe and effective manoeuvres.

5. What are the challenges of using large-scale autonomous vehicle datasets?

Challenges of using large-scale autonomous vehicle datasets include managing and processing large volumes of data, ensuring annotation quality and consistency, calibrating and synchronizing sensor data, covering diverse driving conditions, and addressing legal and ethical considerations related to data use and sharing.

6. What is the future of autonomous vehicle datasets?

The future of autonomous vehicle datasets includes increased diversity, real-time data streaming capabilities, enhanced sensor technology, synthetic data generation, and collaborative data sharing. These advancements will help accelerate the development and deployment of autonomous driving technologies.

Conclusion

Autonomous vehicle datasets play a pivotal role in developing and advancing self-driving technology. They provide the foundational data necessary for training, validating, and testing the algorithms that enable vehicles to perceive and navigate their environments safely and efficiently.

The best datasets, such as KITTI, Waymo Open Dataset, nuScenes, ApolloScape, Argoverse, BDD100K, and Cityscapes, offer diverse, high-quality, and multimodal data that address various aspects of autonomous driving.

Future trends include greater diversity, real-time data capabilities, enhanced sensor technology, synthetic data generation, and collaborative efforts in data sharing.

These advancements will help overcome current challenges and drive the progress of autonomous vehicle technology, ultimately leading to safer, more efficient, and more reliable autonomous driving systems.